Beyond the Average: Why Traditional Statistics Miss the Mark and How to Fix It

"Uncover the limitations of ordinary least squares and explore robust alternatives for a clearer, more accurate view of your data."

In today's data-driven world, statistical analysis is the bedrock of informed decision-making. From predicting market trends to understanding customer behavior, businesses and researchers alike rely on statistical models to extract meaningful insights from raw data. However, the accuracy and reliability of these insights hinge on the suitability of the chosen statistical methods.

Traditional statistical techniques, such as Ordinary Least Squares (OLS) regression, operate under a set of assumptions about the data. One of the most commonly cited is that the model's errors (the residuals around the fitted line) are normally distributed, which in practice means extreme values should be rare. But what happens when these assumptions are violated? What if your data is skewed, contains extreme values, or simply doesn't conform to the idealized normal distribution? In these scenarios, relying solely on OLS can produce distorted estimates and misleading conclusions.

This article explores the limitations of OLS and introduces robust alternatives that provide a more reliable way to analyze data in the face of real-world complexities. We'll delve into methods that are less sensitive to outliers and non-normality, offering a clearer and more accurate understanding of your data.

The Pitfalls of OLS: When Traditional Methods Fail

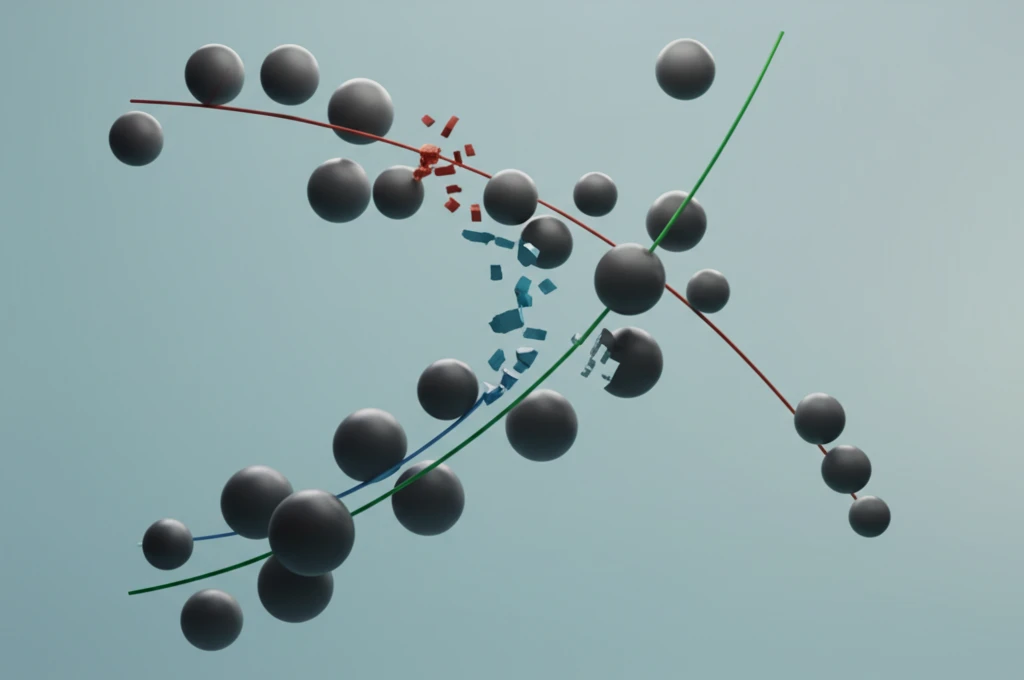

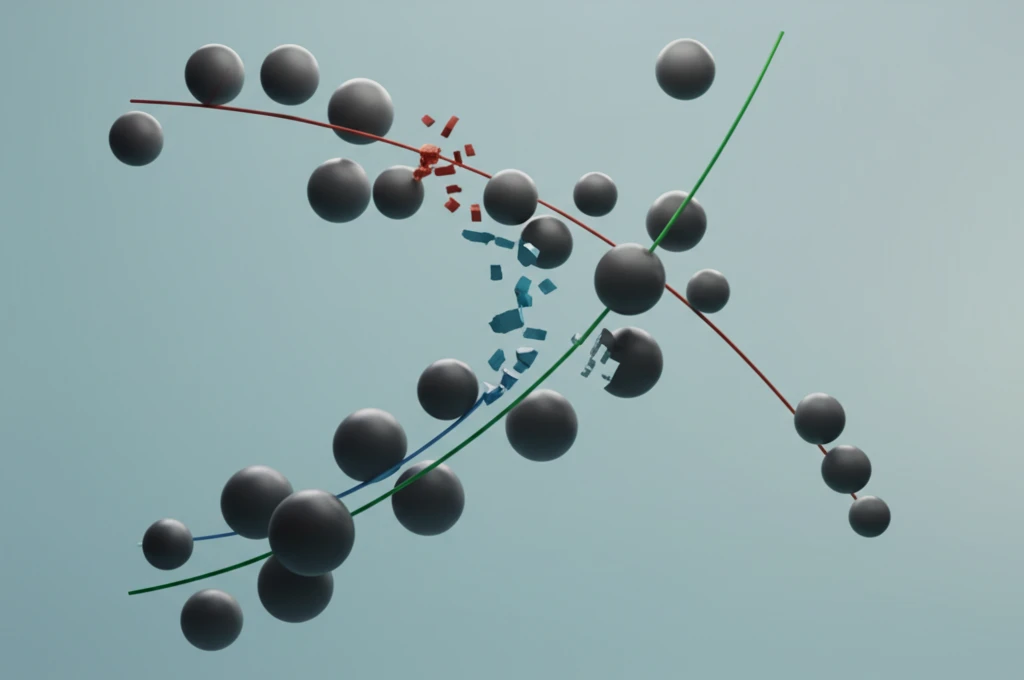

Ordinary Least Squares (OLS) regression is a widely used statistical technique that finds the best-fitting linear relationship between a dependent variable and one or more independent variables. OLS works by minimizing the sum of the squared differences (residuals) between the observed values and the values predicted by the model. Because each residual is squared, a point twice as far from the line contributes four times as much to that sum, which makes the method highly sensitive to extreme values, or outliers. Even a single outlier can exert a disproportionate influence on the OLS regression line, pulling it away from the true underlying relationship.
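To make this sensitivity concrete, here is a small sketch in plain Python (no third-party libraries assumed) that fits the same ten points twice: once with closed-form OLS and once with the Theil-Sen estimator, which takes the median of all pairwise slopes. Theil-Sen is not discussed in this article; it stands in here as one illustrative robust alternative. The data are hypothetical: nine points lie exactly on y = 2x + 1 and the tenth is deliberately corrupted.

```python
from itertools import combinations
from statistics import median


def ols_fit(xs, ys):
    """Closed-form simple OLS: slope = S_xy / S_xx, line passes through the means."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, my - slope * mx


def theil_sen_fit(xs, ys):
    """Theil-Sen: median of pairwise slopes; intercept = median of y - slope * x."""
    slopes = [
        (y2 - y1) / (x2 - x1)
        for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2)
        if x2 != x1  # skip vertical pairs, whose slope is undefined
    ]
    slope = median(slopes)
    intercept = median([y - slope * x for x, y in zip(xs, ys)])
    return slope, intercept


# Hypothetical data: true line y = 2x + 1, with one corrupted observation.
xs = list(range(10))
ys = [2 * x + 1 for x in xs]
ys[-1] = 60  # the true value at x = 9 would be 19

print("OLS:       slope=%.2f intercept=%.2f" % ols_fit(xs, ys))        # slope=4.24 intercept=-4.96
print("Theil-Sen: slope=%.2f intercept=%.2f" % theil_sen_fit(xs, ys))  # slope=2.00 intercept=1.00
```

The single corrupted point more than doubles the OLS slope, while the median-based estimate recovers the underlying line exactly, because 36 of the 45 pairwise slopes are unaffected by the outlier. In practice you would more likely use a library implementation, such as scikit-learn's `TheilSenRegressor` or `HuberRegressor`.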

Situations in which OLS results warrant extra caution include:

- Presence of outliers in the data.

- Non-normal distribution of the errors (residuals).

- Small sample sizes, where assumptions are harder to verify.

- Data with inherent skewness or potential for extreme values.
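Outliers are the first item on that list, and detecting them is itself a place where averages can mislead. The sketch below, in plain Python, contrasts a classic mean/standard-deviation z-score with the modified z-score of Iglewicz and Hoaglin, which replaces the mean with the median and the standard deviation with the median absolute deviation (MAD). The sample values are hypothetical, and the 3.5 cutoff is a commonly used convention rather than a universal rule; the same check can be applied to a model's residuals.

```python
from statistics import mean, median, stdev


def robust_z_scores(values):
    """Modified z-scores: 0.6745 * (x - median) / MAD."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        raise ValueError("MAD is zero; modified z-scores are undefined")
    return [0.6745 * (v - med) / mad for v in values]


def flag_outliers(values, threshold=3.5):
    """Return the indices whose |modified z-score| exceeds the threshold."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]


# Hypothetical measurements with one extreme value at index 6.
values = [10, 12, 11, 13, 12, 11, 50]

# Classic z-scores: the outlier inflates the standard deviation,
# so even the extreme point stays below the usual |z| > 3 cutoff.
m, s = mean(values), stdev(values)
classic = [(v - m) / s for v in values]

print(flag_outliers(values))  # [6]
```

The classic score is "masked" because the outlier pulls both the mean and the standard deviation toward itself; the median and MAD barely move, so the extreme value stands out clearly.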

Embracing Robustness for Reliable Insights

While OLS remains a valuable tool in many situations, it's crucial to recognize its limitations and to consider robust alternatives when dealing with real-world data. By adopting methods that are less sensitive to outliers and non-normality, researchers and businesses can gain a clearer and more reliable understanding of their data, and make better-informed decisions as a result.